LLMs for Filtering Jobs -- Part 1

tl;dr:

I annotate some data, and try out Promptfoo to compare some small and small-ish language models.

Why?

I obtained a list of job postings, but it is nonspecific. Only a small portion of them will be relevant to me, and I don't feel like reading all of them. Instead, I feel like using this as a toy problem for exploring LLM workflows.

What?

For a first exploratory pass at the problem, my thinking was to simply run successive “yes or no” queries. Instead of bothering with any prompt engineering, I ran each query through a couple of different LLMs and trusted only the answers where they agreed (or, at least, did not contradict each other). This worked surprisingly well, and would probably work even better with three models and a majority vote. For ad hoc jobs without any particular quality requirement, I think that's an acceptable starting point.
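Both combination rules are simple enough to sketch. The helper names below are hypothetical, and they assume each reply has already been normalized to one of three labels. Treating “insufficient information” as compatible with either yes or no is one reading of “did not contradict”, not necessarily the exact rule used here.

```python
from collections import Counter
from typing import Optional, Sequence

UNSURE = "insufficient information"


def combine_pair(a: str, b: str) -> Optional[str]:
    """Accept two models' answers only if they don't contradict each other.

    Assumption: "insufficient information" is compatible with any answer,
    so it defers to the other model. A yes/no clash yields None.
    """
    if a == b:
        return a
    if a == UNSURE:
        return b
    if b == UNSURE:
        return a
    return None  # "yes" vs "no": a genuine contradiction


def majority_vote(answers: Sequence[str]) -> Optional[str]:
    """Return the label chosen by a strict majority of models, else None."""
    if not answers:
        return None
    label, count = Counter(answers).most_common(1)[0]
    return label if count > len(answers) / 2 else None
```

For example, `combine_pair("yes", "insufficient information")` returns `"yes"`, `combine_pair("yes", "no")` returns `None`, and `majority_vote(["yes", "no", "yes"])` returns `"yes"`.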

However, I rapidly became curious about all of the complexity that I was shoving under the rug with that approach.

- Which model was best, given identical prompting?

- What if I want to scale a pipeline like this? Wouldn’t I rather not burn 2-3x as many tokens as strictly necessary? Current models are probably trustworthy enough to just use one, for simple queries.

- Speaking of efficiency, how small of a model could I use? Can I get anything useful out of a 1 billion parameter model at 2-bit quantization?

- How complex of a query can I run?

Aw heck. It’s a good day to fall down a rabbit-hole.
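For the size question, a rough rule of thumb is that the weights take about (parameters × bits per weight ÷ 8) bytes. The sketch below is only that estimate: it ignores the KV cache, activations, and the per-block scale factors that real quantization formats store, so actual memory use will be somewhat higher.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in gigabytes (1e9 bytes).

    Ignores KV cache, activations, and quantization metadata such as scales.
    """
    return n_params * bits_per_weight / 8 / 1e9


for name, params, bits in [
    ("1B @ 2-bit", 1e9, 2),
    ("8B @ 4-bit", 8e9, 4),
    ("8B @ 16-bit", 8e9, 16),
]:
    print(f"{name}: ~{weight_memory_gb(params, bits):.2f} GB")
```

By this estimate, a 1B model at 2-bit quantization needs only about a quarter of a gigabyte for its weights.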

Comparing Small Models

Given a relatively unconsidered prompt, how do different models behave? Are they accurate for simple queries? Can we constrain their output to “yes”, “no”, or “insufficient information”?[1]
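One way to enforce the three-way format when scoring is a strict parser that tolerates only differences in whitespace, case, surrounding quotes, and trailing punctuation. The function name and exactly what it tolerates are assumptions; any other reply counts as a format violation and comes back as None.

```python
from typing import Optional

LABELS = ("yes", "no", "insufficient information")


def normalize_answer(raw: str) -> Optional[str]:
    """Map a raw reply onto one of the allowed labels, or None if it doesn't fit."""
    # Trim, lowercase, then drop surrounding quotes, backticks, and end punctuation
    text = raw.strip().lower().strip(" \t\n\"'`.!")
    # Collapse internal runs of whitespace (e.g. a stray line break)
    text = " ".join(text.split())
    return text if text in LABELS else None
```

Keeping format violations separate from wrong answers makes it easier to tell a model that ignores instructions from one that follows them but answers incorrectly.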

For a simple query, let’s try to ascertain whether a job is remote or not.

I started by labeling some of the postings using Label Studio.[2] Then I wrote a quick script to transform my labeled JSON data into Promptfoo assertions.
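The conversion script itself isn't shown, but a minimal version might look like the sketch below. It assumes Label Studio's JSON export format (a list of tasks, each with `data` and `annotations`, where each annotation holds a `result` list of choice values) and uses Promptfoo's `equals` assertion. The `text` data field, the `post` prompt variable, and the lowercasing of labels are hypothetical choices.

```python
import json
import sys
from typing import List, Optional


def first_choice(task: dict) -> Optional[str]:
    """Return the first choice found in a task's annotations, if any."""
    for annotation in task.get("annotations", []):
        for result in annotation.get("result", []):
            choices = result.get("value", {}).get("choices")
            if choices:
                return choices[0]
    return None


def to_promptfoo_tests(tasks: List[dict], text_key: str = "text") -> List[dict]:
    """Turn labeled Label Studio tasks into Promptfoo test cases."""
    tests = []
    for task in tasks:
        label = first_choice(task)
        if label is None:
            continue  # skip tasks that were never labeled
        tests.append({
            "description": f"task {task.get('id')}",
            "vars": {"post": task["data"][text_key]},  # hypothetical var name
            "assert": [{"type": "equals", "value": label.lower()}],
        })
    return tests


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as f:
        exported = json.load(f)
    # JSON is valid YAML, so this output can be saved as a .yaml test file
    print(json.dumps(to_promptfoo_tests(exported), indent=2))
```

Running it on an export and redirecting the output to something like `tests.yaml` (file names hypothetical) should give a file that the `tests` entry of promptfooconfig.yaml can point at.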

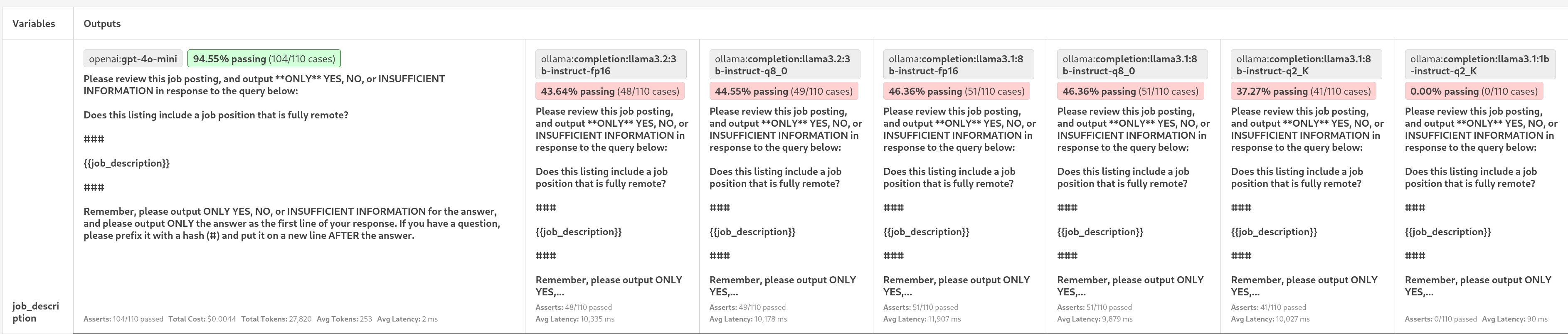

You can see my prompt here, and how several different models and quantizations performed:

With my naive prompting, gpt-4o-mini does quite well, while the various 3B and 8B Llamas do pretty poorly. I was curious about the effect of quantization on accuracy in a simple test like this, but it's too early to tell when they're all doing this badly.

What’s wrong with the Llamas?

Every one except for the smallest adhered pretty consistently to the requested output format. They just didn’t give the expected responses.
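That failure mode (well-formed but wrong) is easier to see when format adherence and accuracy are tallied separately. Here is a small hypothetical helper that does this from a list of replies and their expected labels:

```python
from typing import Dict, List

LABELS = {"yes", "no", "insufficient information"}


def score(replies: List[str], expected: List[str]) -> Dict[str, float]:
    """Tally format adherence and accuracy separately for one model's replies."""
    if not expected or len(replies) != len(expected):
        raise ValueError("need one reply per labeled example")
    cleaned = [r.strip().lower() for r in replies]
    n_formatted = sum(r in LABELS for r in cleaned)
    n_correct = sum(r == e for r, e in zip(cleaned, expected))
    return {
        "format_rate": n_formatted / len(expected),
        "accuracy": n_correct / len(expected),
        # Expected labels are valid labels, so every correct reply is well-formed
        "accuracy_if_formatted": n_correct / n_formatted if n_formatted else 0.0,
    }
```

For example, `score(["yes", "no", "Maybe?", "no"], ["yes", "yes", "no", "no"])` gives a format rate of 0.75 and an accuracy of 0.5. A high format rate paired with low accuracy is the pattern described above.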

Part of the problem here may be that my data is a bit dirty: it includes some posts that are not job postings at all. For labeling purposes, I marked these as “insufficient information”, but they don't match what the prompt says it is being given. Maybe gpt-4o-mini is just more graceful when the data differs from the exact expectation?

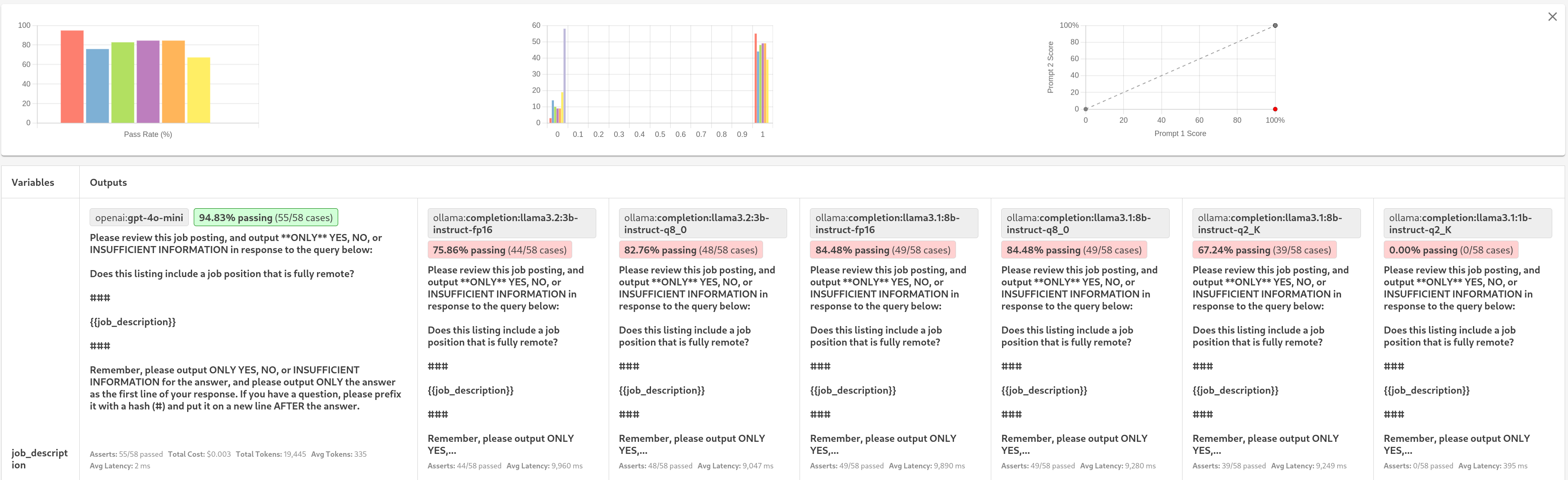

I will remove these from the data and we’ll try the eval again.

Much better! It seems like they just couldn’t handle the deviation from their expected input very well.

I would guess that, with a little prompt engineering to make my exact expectations more explicit, llama3.1:8b could perform similarly in practice to gpt-4o-mini on this task.

That is potentially significant, since gpt-4o-mini costs 3x as much per input token and 7.5x as much per output token.

| Model | Input Cost (USD per million tokens) | Output Cost (USD per million tokens) |
|---|---|---|
| llama3.1:8b | $0.05 | $0.08 |
| gpt-4o-mini | $0.15 | $0.60 |
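Classification prompts are mostly input (a whole post goes in, and a word or two comes out), so the effective price ratio depends on the token mix. Here is a quick calculator using the prices in the table. The workload numbers are hypothetical.

```python
# USD per million tokens: (input, output), taken from the table
PRICES = {
    "llama3.1:8b": (0.05, 0.08),
    "gpt-4o-mini": (0.15, 0.60),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Total cost of a run, given total input and output token counts."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# Hypothetical workload: 10,000 posts, ~500 tokens in and ~5 tokens out per post
n_posts, tokens_in, tokens_out = 10_000, 500, 5
for model in PRICES:
    total = cost_usd(model, n_posts * tokens_in, n_posts * tokens_out)
    print(f"{model}: ${total:.2f}")
```

With that mix, gpt-4o-mini comes out at about 3x the cost (roughly $0.78 versus $0.25). Output-heavy workloads would push the ratio toward 7.5x.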

Comparing Mistral models under 40GB

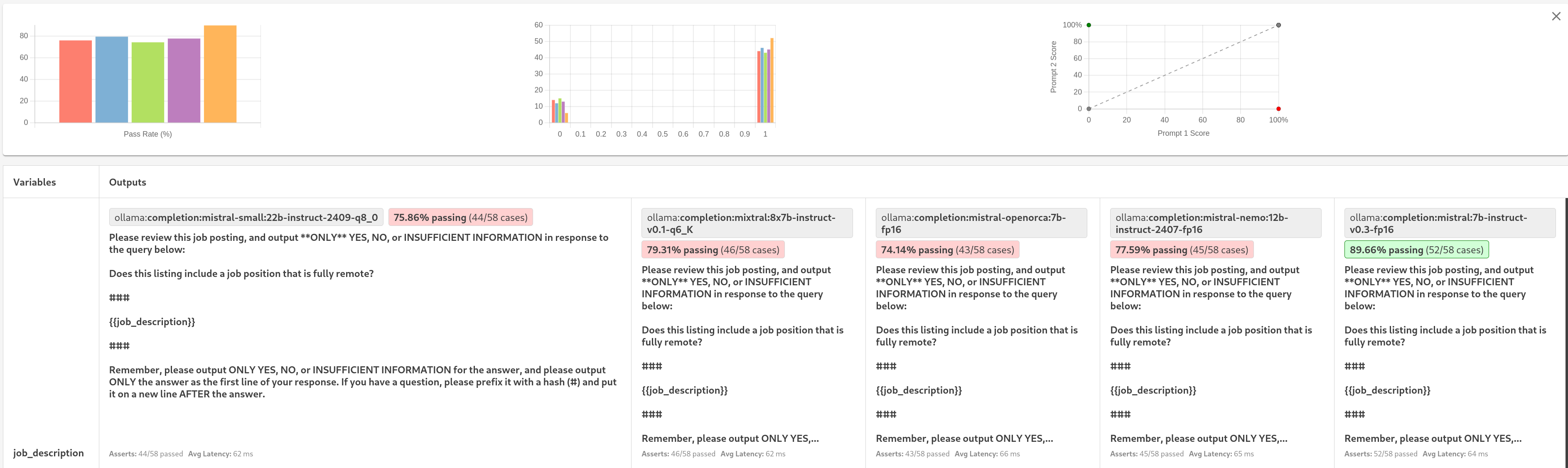

I also tried some Mistral models and one derived from a Mistral model.

It seems like (with the cleaner data) just throwing more parameters at the problem doesn’t do much.

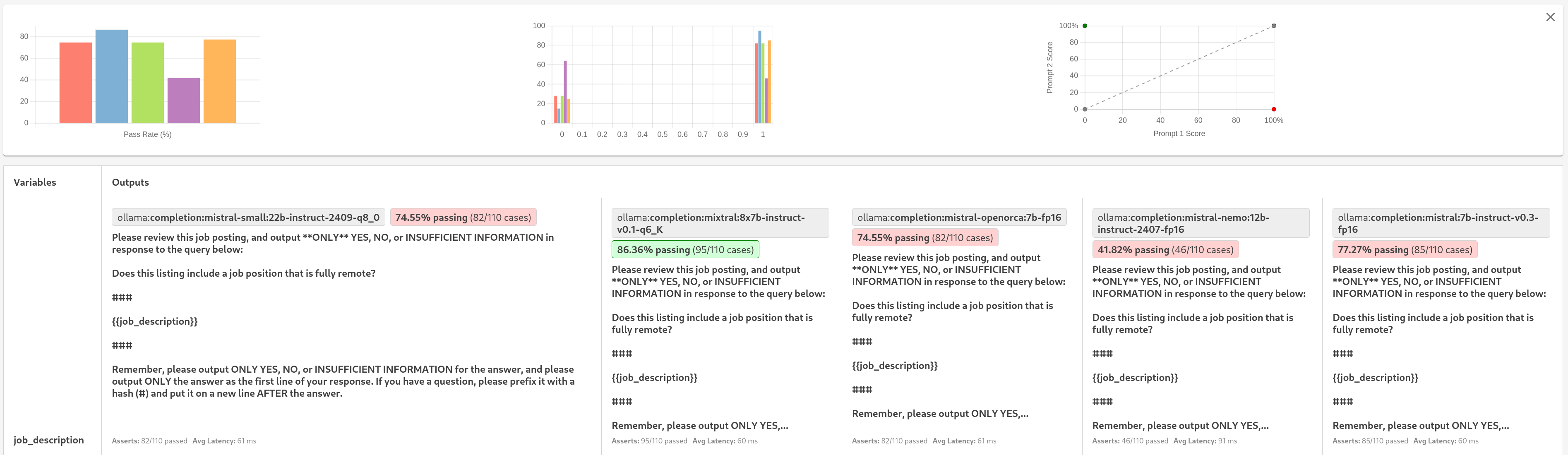

Will these larger models handle the dirty data better?

Just for fun, I will re-run the larger Mistral models against the full set of annotated data. This includes those posts that are not jobs, for which we (perhaps confusingly) are expecting a response of “insufficient information”.

My intuition is that the larger models will be slightly more tolerant of this weirdness and more likely to “infer what I actually wanted”.

At a basic anecdotal level, there seems to be some support for this intuition.
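Running the same models against both the clean and the full dataset is simplest if each test case records whether its post is actually a job. Here is one hypothetical way to do that. It assumes an `is_job` variable was added to each test during conversion, which is not part of the setup described here.

```python
from typing import List, Tuple


def split_tests(tests: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Return (clean, full): job posts only, and every test case.

    Assumes a hypothetical boolean var "is_job"; tests without it count as jobs.
    """
    clean = [t for t in tests if t.get("vars", {}).get("is_job", True)]
    return clean, list(tests)
```

Writing the two lists to separate test files lets one config be evaluated against either dataset without editing the annotations.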

How about a regex?

I also tried to classify job remoteness using simple string matching. This was ~64% accurate on the same (clean) dataset, and it saves a few teraFLOPs per item.
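A string-matching baseline can be as crude as the sketch below. The patterns are illustrative guesses, not the ones behind the ~64% figure. Checking negative phrases first keeps “not remote” from being read as remote, and anything unmatched falls back to “insufficient information”.

```python
import re

# Illustrative patterns only; tune them against labeled data.
NOT_REMOTE = re.compile(
    r"\b(not remote|no remote|on[- ]?site|in[- ]office|in[- ]person)\b", re.IGNORECASE
)
REMOTE = re.compile(r"\b(remote|work from home|wfh|fully distributed)\b", re.IGNORECASE)


def classify_remote(post: str) -> str:
    """Crude keyword classifier returning one of the three labels."""
    if NOT_REMOTE.search(post):
        return "no"
    if REMOTE.search(post):
        return "yes"
    return "insufficient information"
```
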

Using Promptfoo

While writing this post, I tried Promptfoo for the first time. It provides a convenient UI for this type of comparison between models and between prompts. It looks like there’s enough flexibility built in to make it do most of what you’d need it to do in this domain.

I did have one issue with it, though. Given a list of providers, Promptfoo seems to run the tests in order, running each test against every provider before moving on to the next test. If you're using a single Ollama server that has to swap models in and out of memory, this is much, much slower than running all of the tests for one provider before moving on to the next provider.

Most of the time is spent loading and unloading models, rather than computing anything.
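The cost of that ordering is easy to count. This sketch assumes the worst case, where only one model fits in memory at a time. Ollama can keep several models loaded if memory allows, in which case there would be fewer swaps. The model names are only illustrative.

```python
from itertools import product
from typing import List, Tuple


def count_model_loads(schedule: List[Tuple[str, int]]) -> int:
    """Count model loads, assuming only one model fits in memory at a time."""
    loads, resident = 0, None
    for model, _test_id in schedule:
        if model != resident:  # a different model must be swapped in
            loads += 1
            resident = model
    return loads


models = ["llama3.2:3b", "llama3.1:8b", "mistral-nemo"]  # illustrative names
test_ids = range(50)

# Test-major: each test runs against every model before the next test
test_major = [(m, t) for t, m in product(test_ids, models)]
# Provider-major: all tests for one model, then the next model
provider_major = [(m, t) for m, t in product(models, test_ids)]

print(count_model_loads(test_major), count_model_loads(provider_major))
```

With 3 models and 50 tests, the test-major ordering loads a model 150 times, while the provider-major ordering loads each model only once.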

For a quick workaround, I commented out all but one model at a time in my promptfooconfig.yaml and ran promptfoo eval once for each model. Since Promptfoo caches provider responses, I could then follow up with a final run, with all providers uncommented, to generate the nicer combined report (without actually repeating any provider API calls).
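The commenting-out step could also be scripted. This sketch uses a different mechanism than the manual workaround. It assumes your promptfoo version supports the `--filter-providers` option of `promptfoo eval` (a regex filter on providers; check `promptfoo eval --help`), and the provider IDs are examples. An unanchored pattern can also match other providers whose IDs contain it.

```python
import re
import subprocess
from typing import List


def eval_commands(provider_ids: List[str], config: str = "promptfooconfig.yaml") -> List[List[str]]:
    """One `promptfoo eval` per provider, then a final run over all providers.

    The final run should be served from Promptfoo's response cache.
    """
    per_model = [
        ["promptfoo", "eval", "-c", config, "--filter-providers", re.escape(pid)]
        for pid in provider_ids
    ]
    return per_model + [["promptfoo", "eval", "-c", config]]


def run_all(provider_ids: List[str]) -> None:
    """Run each command in turn, stopping at the first failure."""
    for cmd in eval_commands(provider_ids):
        subprocess.run(cmd, check=True)
```

Calling something like `run_all(["ollama:chat:llama3.1:8b", "openai:gpt-4o-mini"])` would then evaluate one model at a time before producing the combined report.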

What’s Next?

I want to find some useful queries which larger models can handle, but smaller ones can’t. These will also have to be something that I can label reasonably easily. One obvious low-hanging fruit is to use a model to filter out the posts that aren’t jobs.

Ultimately, I might like to put my entire resume in-context and see if I can filter for jobs that fit based on that. It might also be interesting to follow links from the posts to get longer, more detailed job descriptions when they are available, as well as additional information about the companies.

[1] I'll get into structured output later. This post covers only the bare-bones basics.

[2] Label Studio is the open-source core offering of a for-profit company.

It is literally as easy to use as `pip install label-studio && label-studio`, which is pretty neat. There are built-in templates for labeling different types of data, so it takes about 5 minutes to get a usable labeling UI with keybinds. Sure, it might have taken only 5 minutes to get Claude to whip up a custom labeling UI, but why redo what's already been done?